Customer service costs dropped 67% at companies using RAG systems that instantly retrieve accurate information from knowledge bases instead of forcing representatives to search manually through hundreds of documents. Retrieval-Augmented Generation solves AI’s biggest weakness—hallucination—by grounding responses in real, verifiable data rather than generated guesses. This breakthrough is why enterprise AI adoption jumped from 23% to 78% in just two years. (Learn more in our guide: What is AI Hallucination?)

If you’ve been following AI developments, you’ve probably heard “RAG” mentioned alongside ChatGPT.

This guide breaks down RAG in plain English — what it is, how it works, why it matters, and which tools use it.

Quick Summary

RAG (Retrieval-Augmented Generation) is an AI architecture that improves large language model (LLM) responses by first retrieving relevant information from external sources, then using that information to generate more accurate, up-to-date answers.

Think of it this way: instead of asking someone to answer questions purely from memory (which might be outdated or incomplete), RAG lets the AI check its notes first.

| Aspect | Standard LLM | RAG-Enhanced LLM |
|---|---|---|
| Knowledge Source | Training data only | Training data + external databases |
| Data Freshness | Frozen at training cutoff | Real-time or regularly updated |
| Accuracy | Prone to hallucinations | Grounded in retrieved sources |
| Citations | Can’t cite sources | Can reference specific documents |
| Customization | Requires fine-tuning | Uses existing knowledge bases |

Table of Contents

- What is RAG?

- How Does RAG Work?

- Why RAG Matters

- Benefits of RAG

- RAG vs Fine-Tuning

- Common RAG Applications

- Popular RAG Tools and Frameworks

- Limitations of RAG

- The Future of RAG

- FAQs

What is RAG?

Retrieval-Augmented Generation (RAG) is an AI framework introduced by Facebook AI Research (now Meta AI) in 2020. It combines two powerful capabilities:

- Information Retrieval — Finding relevant documents or data from external sources

- Text Generation — Using an LLM to generate human-like responses

The key innovation? Rather than relying solely on what an AI model learned during training, RAG systems fetch relevant information from knowledge bases at the time of the query and include it in the response generation process.

The Problem RAG Solves

Large language models like GPT-4 and Claude are trained on massive datasets, but this training has limitations:

- Knowledge Cutoff: Training data has a specific end date. A model trained in 2024 doesn’t know what happened in 2025.

- No Access to Private Data: LLMs can’t access your company’s internal documents, customer data, or proprietary research.

- Hallucinations: When LLMs don’t know something, they sometimes make things up — confidently.

- No Citations: Standard LLMs can’t tell you where they got their information.

RAG addresses all of these problems by connecting the LLM to external, updateable knowledge sources.

How Does RAG Work?

RAG operates in four main stages:

1. Create External Knowledge Base

First, you need a searchable collection of information. This could be:

- Company documents (PDFs, Word docs, spreadsheets)

- Product databases

- Research papers

- Website content

- FAQs and support tickets

- Real-time data feeds

This data is processed using embedding models that convert text into numerical vectors (essentially, mathematical representations of meaning). These vectors are stored in a vector database designed for fast similarity searches.
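To make the indexing step concrete, here is a minimal sketch. It assumes a toy hashed bag-of-words function in place of a real embedding model and a plain Python list in place of a vector database. The names `embed` and `build_index`, the 64-dimension size, and the two sample documents are all invented for illustration and are not taken from any library.

```python
import hashlib
import math
import re

DIM = 64  # illustrative size; real embedding models use hundreds or thousands of dimensions


def embed(text: str) -> list[float]:
    """Toy stand-in for an embedding model: hashed bag-of-words, L2-normalized."""
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        # A stable hash assigns each token to one of DIM buckets.
        bucket = int(hashlib.sha256(token.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


def build_index(documents: list[str]) -> list[tuple[str, list[float]]]:
    """Stand-in for a vector database: store each text next to its vector."""
    return [(doc, embed(doc)) for doc in documents]


# Made-up sample documents, for illustration only.
index = build_index([
    "Parental leave policy for eligible employees.",
    "Expense reports are submitted through the finance portal.",
])
```

A real system would swap `embed` for a trained embedding model, which captures meaning rather than just shared words, and would store the vectors in a database built for similarity search.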

2. Retrieve Relevant Information

When a user asks a question:

- The query is converted into a vector using the same embedding model

- The system searches the vector database for the most similar content

- The top matching documents or passages are retrieved

For example, if an employee asks “What’s our parental leave policy?”, the system finds and retrieves the HR policy documents about parental leave.
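The retrieval step can be sketched in a few lines. This example assumes a word-count vector as a stand-in for a real embedding and an in-memory list instead of a vector database. The function names and sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a sparse word-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Embed the query the same way as the documents and return the closest matches."""
    query_vec = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(query_vec, embed(d)), reverse=True)
    return ranked[:top_k]


# Made-up documents, for illustration only.
docs = [
    "Parental leave policy: eligible employees may take paid parental leave.",
    "Travel policy: book flights through the company portal.",
    "Password resets are handled through the IT help desk.",
]
top = retrieve("What's our parental leave policy?", docs, top_k=1)
```

Here `top` holds the parental leave document, because it shares the most words with the query. Word counts only match exact terms, so a production system would rely on a trained embedding model to match related wording as well.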

3. Augment the Prompt

The retrieved information is combined with the user’s original question to create an enriched prompt for the LLM. This is where “augmented” comes from — the prompt is augmented with relevant context.

The augmented prompt might look like:

Context: [Retrieved policy documents about parental leave]

Question: What's our parental leave policy?

Instructions: Answer based on the provided context. If the answer isn't in the context, say so.
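Assembling that prompt in code might look like the sketch below. The `build_prompt` name and the numbered `[1]`, `[2]` passage labels are assumptions added for illustration. The wording of the instructions follows the example above.

```python
def build_prompt(question: str, passages: list[str]) -> str:
    """Combine retrieved passages and the user's question into one prompt."""
    # Number each passage so the model can refer back to it.
    context = "\n\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        f"Context:\n{context}\n\n"
        f"Question: {question}\n\n"
        "Instructions: Answer based on the provided context. "
        "If the answer isn't in the context, say so."
    )


prompt = build_prompt(
    "What's our parental leave policy?",
    ["Placeholder text for a retrieved HR policy passage."],
)
```

Numbering the passages is optional, but it gives the model a simple handle for citing which passage supports each claim.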

4. Generate Response

The LLM generates a response using both its general knowledge AND the specific retrieved information. Because the relevant documents are right there in the prompt, the model can:

- Give accurate, specific answers

- Quote exact policies or figures

- Cite its sources

- Admit when information isn’t available

The RAG Pipeline Visualized

User Question

↓

[Embedding Model] → Query Vector

↓

[Vector Database Search] → Relevant Documents

↓

[Combine: Question + Context]

↓

[LLM Generation]

↓

Grounded Response with Citations
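The whole pipeline can be wired together in a short sketch. It assumes a word-overlap score in place of vector similarity and a placeholder function in place of a real LLM call. Every name here (`answer`, `placeholder_llm`, the file names) is hypothetical.

```python
import re
from typing import Callable


def words(text: str) -> set[str]:
    """Lowercase word set used by the toy relevance score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def answer(question: str, knowledge_base: dict[str, str],
           generate: Callable[[str], str], top_k: int = 1) -> dict:
    # Retrieve: rank passages by shared words (stand-in for vector search).
    ranked = sorted(knowledge_base.items(),
                    key=lambda item: len(words(question) & words(item[1])),
                    reverse=True)
    hits = ranked[:top_k]
    # Augment: place the retrieved passages in front of the question.
    context = "\n".join(f"[{name}] {text}" for name, text in hits)
    prompt = f"Context:\n{context}\n\nQuestion: {question}"
    # Generate: pass the augmented prompt to whichever model is plugged in.
    return {"answer": generate(prompt), "sources": [name for name, _ in hits]}


def placeholder_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM call; it only reports the prompt size."""
    return f"(model output for a {len(prompt)}-character prompt)"


kb = {
    "hr-policy.txt": "Parental leave policy for eligible employees.",
    "it-guide.txt": "How to reset your password.",
}
result = answer("What's our parental leave policy?", kb, placeholder_llm)
```

Passing the model in as a function keeps retrieval and generation separate, so either side can be replaced without touching the other.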

Why RAG Matters

RAG has become the dominant paradigm for enterprise AI applications for several reasons:

1. It Makes AI Actually Useful for Business

Generic AI chatbots are impressive but limited. A customer service bot needs to know your products, your policies, your FAQs — not generic information. RAG enables this without expensive model retraining.

2. It Dramatically Reduces Hallucinations

By grounding responses in retrieved documents, RAG systems have a “source of truth” to reference. Studies show RAG can reduce hallucination rates by 30-50% compared to standard LLM responses.

3. It Keeps AI Current

Training an LLM is a snapshot in time. RAG systems can update their knowledge bases daily, hourly, or even in real-time. The AI always has access to the latest information.

4. It’s Cost-Effective

Fine-tuning or retraining large models requires significant compute resources. For knowledge-heavy tasks, RAG can often achieve comparable domain-specific performance by simply updating documents in a database, with no model changes required.

5. It Enables Accountability

When a RAG system cites its sources, users can verify the information. This transparency is crucial for enterprise adoption, especially in regulated industries like healthcare and finance.

Benefits of RAG

Cost-Efficient AI Implementation

Fine-tuning a large model can cost thousands to hundreds of thousands of dollars in compute. RAG lets you customize AI behavior by simply uploading documents. The underlying model stays the same.

Real-Time Knowledge Access

Connect your RAG system to APIs, databases, or web scrapers. Need current stock prices? Live customer data? Breaking news? RAG can retrieve it.

Lower Hallucination Risk

RAG doesn’t eliminate hallucinations, but it significantly reduces them. The model has specific source material to reference rather than generating from potentially faulty “memory.”

Increased User Trust

Responses that include citations like “According to Policy Document 3.2.1…” are more trustworthy than “I believe the policy is…” Users can verify claims.

Data Privacy and Security

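With RAG, sensitive data stays in your own data stores instead of being baked into model weights. The LLM does not learn from your private information; it only sees the retrieved passages at query time. With a hosted model, those passages are still sent to the provider for that request, so check its data-handling terms.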

Easier Maintenance

Need to update your AI’s knowledge? Update the documents. No model retraining required. This makes keeping AI systems current vastly simpler.

RAG vs Fine-Tuning

Two main approaches exist for customizing LLM behavior: RAG and fine-tuning. Here’s how they compare:

| Factor | RAG | Fine-Tuning |
|---|---|---|
| Cost | Low (just documents) | High (compute-intensive) |
| Setup Time | Hours to days | Days to weeks |
| Knowledge Updates | Easy (update docs) | Hard (retrain model) |
| Data Privacy | Data stays external | Data baked into model |
| Hallucination Risk | Lower (grounded) | Medium |
| Best For | Factual Q&A, support | Style, tone, specialized tasks |
| Requires ML Expertise | Minimal | Significant |

When to Use RAG

- Customer support bots that need product knowledge

- Internal knowledge assistants

- Document search and summarization

- Research assistants

- Any application requiring current information

When to Use Fine-Tuning

- Changing the model’s writing style

- Teaching domain-specific terminology

- Improving performance on specialized tasks

- When you need changes “baked in” to the model

Hybrid Approach

Many production systems use both: fine-tuning for style and behavior, RAG for knowledge. This gives you the best of both worlds.

Common RAG Applications

Enterprise Knowledge Management

Companies use RAG to make internal knowledge searchable and accessible. Instead of employees digging through SharePoint or Confluence, they ask an AI assistant that retrieves relevant documents instantly.

Example: “What was the Q3 revenue for the APAC region?” → RAG retrieves financial reports and answers accurately.

Customer Support Chatbots

RAG-powered support bots can answer product questions, troubleshoot issues, and guide users through processes using your actual documentation.

Example: “How do I reset my password?” → RAG retrieves the specific password reset guide for your platform.

Legal and Compliance

Law firms and compliance teams use RAG to search case law, contracts, and regulatory documents. The AI can find relevant precedents or flag compliance issues.

Example: “What GDPR requirements apply to customer data retention?” → RAG retrieves relevant regulations and internal policies.

Healthcare

Medical professionals use RAG systems to search clinical guidelines, drug interactions, and research literature while keeping patient data secure.

Example: “What are the contraindications for this medication combination?” → RAG retrieves from pharmaceutical databases.

Research and Academia

Researchers use RAG to search across papers, summarize findings, and identify relevant studies from massive corpora of academic literature.

Example: “What recent studies address RAG for medical applications?” → RAG searches and summarizes relevant papers.

Code Documentation

Developer tools use RAG to search codebases, documentation, and Stack Overflow-style knowledge bases to help programmers.

Example: “How do I authenticate with our internal API?” → RAG retrieves the relevant API documentation.

Popular RAG Tools and Frameworks

Vector Databases

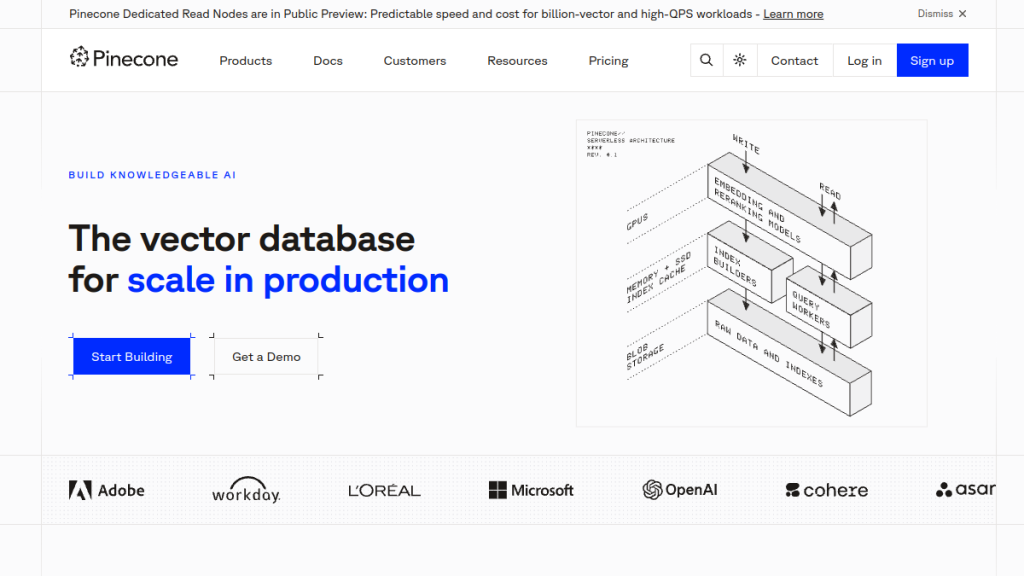

These specialized databases store and search vector embeddings efficiently:

- Pinecone — Managed vector database, easy to use, enterprise-ready

- Weaviate — Open-source, supports hybrid search

- Milvus — Open-source, highly scalable

- Qdrant — Open-source, Rust-based, fast

- Chroma — Simple, developer-friendly, open-source

- pgvector — PostgreSQL extension for vector search

RAG Frameworks

These tools help you build RAG applications:

- LangChain — Most popular framework, supports multiple LLMs and databases

- LlamaIndex — Focused on data ingestion and retrieval

- Haystack — Open-source, production-ready framework

- Semantic Kernel — Microsoft’s framework for AI orchestration

All-in-One Platforms

These provide complete RAG solutions out of the box:

- OpenAI Assistants API — RAG built into the OpenAI platform

- Amazon Bedrock — AWS managed service with RAG capabilities

- Google Vertex AI — Google Cloud’s RAG-enabled platform

- Azure AI Search — Microsoft’s enterprise RAG solution

- Cohere — Focused on enterprise RAG with strong retrieval

Embedding Models

These convert text to vectors:

- OpenAI text-embedding-3 — High-quality, widely used

- Cohere Embed — Multilingual support

- Sentence Transformers — Open-source, many variants

- Voyage AI — Specialized for RAG applications

- Jina Embeddings — Long-context capable

Limitations of RAG

RAG isn’t perfect. Understanding its limitations helps you use it effectively:

Quality Depends on Data Quality

RAG can only retrieve what’s in your knowledge base. Incomplete, outdated, or poorly organized documents lead to poor answers.

Retrieval Failures

Sometimes the right information exists but isn’t retrieved. Semantic search isn’t perfect — relevant documents might use different terminology than the query.

Context Window Limits

LLMs have maximum context sizes. If too many documents are retrieved, they need to be truncated or summarized, potentially losing important details.
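One simple way to stay inside the limit is to keep the highest-ranked passages until a budget is used up. The sketch below assumes passages arrive already ranked and counts whitespace-separated words as a rough proxy for tokens. Real tokenizers count differently, and `pack_context` is a hypothetical name.

```python
def pack_context(passages: list[str], max_tokens: int) -> list[str]:
    """Keep passages in ranked order until the rough token budget is used up."""
    kept, used = [], 0
    for passage in passages:
        cost = len(passage.split())  # crude estimate: one token per word
        if used + cost > max_tokens:
            break  # stop at the first passage that does not fit
        kept.append(passage)
        used += cost
    return kept


ranked = ["alpha beta gamma", "delta epsilon", "zeta eta theta iota"]
selected = pack_context(ranked, max_tokens=5)
```

With a budget of 5, the first two passages fit and the third is dropped. Stopping at the first passage that does not fit keeps the ranking order intact, at the cost of sometimes leaving part of the budget unused.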

Doesn’t Eliminate Hallucinations

RAG reduces hallucinations but doesn’t eliminate them. The model might still hallucinate when:

- Retrieved information is ambiguous

- The query isn’t well covered by the knowledge base

- Multiple conflicting sources are retrieved

Latency

RAG adds retrieval time to every query. Retrieval typically takes tens to hundreds of milliseconds, but this can add up for applications requiring real-time responses.

Complexity

Building and maintaining a RAG pipeline adds engineering complexity. You need to manage embeddings, vector databases, chunking strategies, and prompt engineering.
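Chunking is one of the pieces you will likely tune first. As a rough sketch, the function below splits text into fixed-size word windows that overlap, so a sentence cut at a boundary still appears whole in a neighboring chunk. The default sizes are illustrative assumptions, not recommendations.

```python
def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into word-based chunks; consecutive chunks share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


chunks = chunk_words("one two three four five six seven eight nine ten",
                     chunk_size=4, overlap=1)
```

In this example each chunk starts with the last word of the previous one. Production pipelines often split on sentences, headings, or tokens instead of raw word counts.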

The Future of RAG

RAG continues evolving rapidly. Key trends for 2026 and beyond:

Agentic RAG

RAG systems that don’t just retrieve and generate, but take actions: updating databases, triggering workflows, and interacting with external systems.

Multi-Modal RAG

Retrieval across images, videos, audio, and text. Ask a question and get relevant content from any format.

Long RAG

New approaches that process longer retrieval units (entire documents instead of small chunks), improving context and coherence.

Self-Correcting RAG

Systems that verify their own responses against retrieved sources and flag inconsistencies.
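A very rough version of this idea is to flag answer sentences that share few words with the retrieved sources. The sketch below assumes simple word overlap with an arbitrary 0.5 threshold. Real systems would more likely use an entailment model or a second LLM pass, and `unsupported_sentences` is a hypothetical name.

```python
import re


def unsupported_sentences(answer: str, sources: list[str],
                          threshold: float = 0.5) -> list[str]:
    """Flag answer sentences whose words mostly do not appear in any source."""
    def words(text: str) -> set[str]:
        return set(re.findall(r"[a-z0-9]+", text.lower()))

    source_words = set().union(*(words(s) for s in sources))
    flagged = []
    # Split on whitespace that follows sentence-ending punctuation.
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        tokens = words(sentence)
        if tokens and len(tokens & source_words) / len(tokens) < threshold:
            flagged.append(sentence)
    return flagged


# Made-up source and answer, for illustration only.
sources = ["Refunds are accepted within 30 days of purchase."]
draft = "Refunds are accepted within 30 days. Shipping is always free worldwide."
flagged = unsupported_sentences(draft, sources)
```

Here the shipping sentence is flagged because none of its words appear in the source. Word overlap misses paraphrases and cannot detect contradictions, so treat it as a cheap first filter only.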

Hybrid Retrieval

Combining semantic search with traditional keyword search and structured queries for better precision and recall.
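One common way to merge results from different retrievers is reciprocal rank fusion, where each document earns 1 / (k + rank) from every list it appears in. The sketch below assumes the two input rankings were already produced elsewhere. The document IDs are hypothetical, and k = 60 is the constant commonly used with this method.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs into one fused ranking."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda doc_id: scores[doc_id], reverse=True)


semantic_hits = ["doc_a", "doc_b", "doc_c"]  # hypothetical vector search output
keyword_hits = ["doc_b", "doc_d", "doc_a"]   # hypothetical keyword search output
fused = reciprocal_rank_fusion([semantic_hits, keyword_hits])
```

Documents that rank well in both lists rise to the top, so `doc_b` and `doc_a` come first here. Because the method uses only ranks, it needs no score calibration between the two search systems.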

FAQs

Is RAG the same as giving an AI access to the internet?

Not quite. Internet access gives broad but uncontrolled information access. RAG connects AI to specific, curated knowledge bases that you control. This is crucial for accuracy, privacy, and security.

Can I use RAG with any LLM?

Yes. RAG is model-agnostic. It works with GPT-4, Claude, Llama, Mistral, and any other LLM. The retrieval component is separate from the generation component.

How much data do I need for RAG?

There’s no minimum. RAG can work with a single document or millions. Start small and expand as needed.

Does RAG make AI responses slower?

Slightly. Retrieval adds latency (typically 50-200ms). For most applications, this is negligible. For real-time applications, optimization may be needed.

Is RAG secure for sensitive data?

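RAG can be very secure because your data stays in your own systems and is never trained into the model. The LLM still processes the retrieved passages at query time, so whether they are stored depends on where the model runs and on the provider’s retention policy. Always follow security best practices for your specific deployment.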

What’s the difference between RAG and semantic search?

Semantic search finds relevant documents. RAG uses semantic search as part of a larger pipeline that then generates natural language responses from those documents.

Can RAG cite its sources?

Yes, this is a key feature. Well-implemented RAG systems include citations pointing to specific documents or passages that informed the response.
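How citations are produced varies by system. One simple convention, assumed here purely for illustration, is to number the retrieved passages in the prompt and ask the model to add markers like `[1]` to its answer. The sketch below maps those markers back to source names. The function name and file names are hypothetical.

```python
import re


def resolve_citations(answer: str, sources: list[str]) -> list[str]:
    """Map bracketed markers such as [2] in an answer back to source names."""
    cited = []
    for marker in re.findall(r"\[(\d+)\]", answer):
        idx = int(marker) - 1  # markers are 1-based, list indexes are 0-based
        # Ignore out-of-range markers and skip sources already listed.
        if 0 <= idx < len(sources) and sources[idx] not in cited:
            cited.append(sources[idx])
    return cited


sources = ["hr-policy.pdf", "benefits-faq.md"]
reply = "Leave requests go through HR [1]. See the FAQ for details [2][1]."
cited = resolve_citations(reply, sources)
```

Markers that point outside the source list are ignored, which guards against a model inventing a citation number that was never in the prompt.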

Conclusion

🏆 Our Verdict

RAG has fundamentally changed how organizations deploy AI. By bridging the gap between powerful language models and domain-specific knowledge, it enables AI applications that are accurate, current, and trustworthy.

Whether you’re building a customer support bot, internal knowledge assistant, or specialized research tool, understanding RAG is essential for modern AI development.

The technology continues evolving rapidly — expect more sophisticated retrieval strategies, better integration with enterprise systems, and increasingly capable multi-modal applications.

Ready to explore AI tools that leverage RAG? Check out our reviews of AI writing tools, AI coding assistants, and AI productivity tools — many of which use RAG architectures to deliver accurate, context-aware results.